Computer viruses can be a nightmare. Some can wipe out the information on a hard drive, tie up traffic on a computer network for hours, turn an innocent machine into a zombie and replicate and send themselves to other computers. If you've never had a machine fall victim to a computer virus, you may wonder what the fuss is about. But the concern is understandable -- according to Consumer Reports, computer viruses helped contribute to $8.5 billion in consumer losses in 2008 [source: MarketWatch]. Computer viruses are just one kind of online threat, but they're arguably the best known of the bunch.

Computer viruses have been around for many years. In fact, in 1949, a scientist named John von Neumann theorized that a self-replicating program was possible [source: Krebs]. The computer industry wasn't even a decade old, and already someone had figured out how to throw a monkey wrench into the figurative gears. But it took a few decades before programmers known as hackers began to build computer viruses.

While some pranksters created virus-like programs for large computer systems, it was really the introduction of the personal computer that brought computer viruses to the public's attention. A doctoral student named Fred Cohen was the first to describe self-replicating programs designed to modify computers as viruses. The name has stuck ever since.

In the good old days (i.e., the early 1980s), viruses depended on humans to do the hard work of spreading the virus to other computers. A hacker would save the virus to disks and then distribute the disks to other people. It wasn't until modems became common that virus transmission became a real problem. Today when we think of a computer virus, we usually imagine something that transmits itself via the Internet. It might infect computers through e-mail messages or corrupted Web links. Programs like these can spread much faster than the earliest computer viruses.

We're going to take a look at 10 of the worst computer viruses to cripple a computer system. Let's start with the Melissa virus.

In the spring of 1999, a man named David L. Smith created a computer virus based on a Microsoft Word macro. He built the virus so that it could spread through e-mail messages. Smith named the virus "Melissa," saying that he named it after an exotic dancer from Florida [source: CNN].

Rather than shaking its moneymaker, the Melissa computer virus tempts recipients into opening a document with an e-mail message like "Here is that document you asked for, don't show it to anybody else." Once activated, the virus replicates itself and sends itself out to the top 50 people in the recipient's e-mail address book.

The virus spread rapidly after Smith unleashed it on the world. The United States federal government became very interested in Smith's work -- according to statements made by FBI officials to Congress, the Melissa virus "wreaked havoc on government and private sector networks" [source: FBI]. The increase in e-mail traffic forced some companies to discontinue e-mail programs until the virus was contained.

After a lengthy trial process, Smith lost his case and received a 20-month jail sentence. The court also fined Smith $5,000 and forbade him from accessing computer networks without court authorization [source: BBC]. Ultimately, the Melissa virus didn't cripple the Internet, but it was one of the first computer viruses to get the public's attention.

Flavors of Viruses

In this article, we'll look at several different kinds of computer viruses. Here's a quick guide to what we'll see:

- The general term computer virus usually covers programs that modify how a computer works (including damaging the computer) and can self-replicate. A true computer virus requires a host program to run properly -- Melissa used a Word document.

- A worm, on the other hand, doesn't require a host program. It's an application that can replicate itself and send itself through computer networks.

- Trojan horses are programs that claim to do one thing but really do another. Some might damage a victim's hard drive. Others can create a backdoor, allowing a remote user to access the victim's computer system.
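The three definitions above can be read as a small decision rule. The sketch below is only an illustration of that reading: the `Sample` fields and the `classify` function are hypothetical names, and real malware often blends categories (as later entries on this list show).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """Observed traits of a suspicious program (hypothetical fields)."""
    self_replicates: bool
    needs_host_file: bool
    disguised_as_useful: bool


def classify(sample: Sample) -> str:
    """Apply the sidebar's definitions in order: virus, worm, Trojan horse."""
    if sample.self_replicates and sample.needs_host_file:
        return "virus"          # replicates, but only inside a host program
    if sample.self_replicates:
        return "worm"           # replicates as a standalone application
    if sample.disguised_as_useful:
        return "trojan horse"   # claims to do one thing, does another
    return "unclassified"


# Melissa needed a Word document as its host; a worm needs no host.
melissa_like = Sample(self_replicates=True, needs_host_file=True, disguised_as_useful=False)
worm_like = Sample(self_replicates=True, needs_host_file=False, disguised_as_useful=False)
print(classify(melissa_like))
print(classify(worm_like))
```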

A year after the Melissa virus hit the Internet, a digital menace emerged from the Philippines. Unlike the Melissa virus, this threat came in the form of a worm -- it was a standalone program capable of replicating itself. It bore the name ILOVEYOU.

The ILOVEYOU virus initially traveled the Internet by e-mail, just like the Melissa virus. The subject of the e-mail said that the message was a love letter from a secret admirer. An attachment in the e-mail was what caused all the trouble. The original worm had the file name of LOVE-LETTER-FOR-YOU.TXT.vbs. The vbs extension pointed to the language the hacker used to create the worm: Visual Basic Scripting Edition, better known as VBScript [source: McAfee].
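The file name works as a disguise because only the last extension decides how the file is run; with known extensions hidden, as Windows does by default, the attachment would display as LOVE-LETTER-FOR-YOU.TXT. A mail filter could flag that pattern along the lines of the sketch below. The function name and both extension sets are hypothetical and far from complete.

```python
from pathlib import PurePath

# Hypothetical, deliberately short lists for illustration only.
EXECUTABLE_EXTENSIONS = {".vbs", ".exe", ".js", ".bat", ".scr"}
HARMLESS_LOOKING = {".txt", ".jpg", ".doc", ".pdf"}


def looks_disguised(filename: str) -> bool:
    """Return True if a harmless-looking extension is followed by an executable one."""
    suffixes = [s.lower() for s in PurePath(filename).suffixes]
    if len(suffixes) < 2:
        return False  # a single extension is not a double-extension disguise
    return suffixes[-1] in EXECUTABLE_EXTENSIONS and suffixes[-2] in HARMLESS_LOOKING


print(looks_disguised("LOVE-LETTER-FOR-YOU.TXT.vbs"))
print(looks_disguised("notes.txt"))
```

A check like this only catches one trick; it says nothing about what the attachment actually contains.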

According to anti-virus software producer McAfee, the ILOVEYOU virus had a wide range of attacks:

- It copied itself several times and hid the copies in several folders on the victim's hard drive.

- It added new entries to the victim's Windows registry.

- It replaced several different kinds of files with copies of itself.

- It sent itself through Internet Relay Chat clients as well as e-mail.

- It downloaded a file called WIN-BUGSFIX.EXE from the Internet and executed it. Rather than fix bugs, this program was a password-stealing application that e-mailed secret information to the hacker's e-mail address.

Now that the love fest is over, let's take a look at one of the most widespread viruses to hit the Web.

The Klez virus marked a new direction for computer viruses, setting the bar high for those that would follow. It debuted in late 2001, and variations of the virus plagued the Internet for several months. The basic Klez worm infected a victim's computer through an e-mail message, replicated itself and then sent itself to people in the victim's address book. Some variations of the Klez virus carried other harmful programs that could render a victim's computer inoperable. Depending on the version, the Klez virus could act like a normal computer virus, a worm or a Trojan horse. It could even disable virus-scanning software and pose as a virus-removal tool [source: Symantec].

Shortly after it appeared on the Internet, hackers modified the Klez virus in a way that made it far more effective. Like other viruses, it could comb through a victim's address book and send itself to contacts. But it could also take another name from the contact list and place that address in the "From" field in the e-mail client. It's called spoofing -- the e-mail appears to come from one source when it's really coming from somewhere else.

Spoofing an e-mail address accomplishes a couple of goals. For one thing, it doesn't do the recipient of the e-mail any good to block the person in the "From" field, since the e-mails are really coming from someone else. A Klez worm programmed to spam people with multiple e-mails could clog an inbox in short order, because the recipients would be unable to tell what the real source of the problem was. Also, the e-mail's recipient might recognize the name in the "From" field and therefore be more receptive to opening it.
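Spoofing is possible because the "From" line is just text supplied by whoever sends the message. As a rough defensive sketch, the code below compares the domain in "From" with the domain in the "Return-Path" header that the receiving server records. The sample message, addresses and function names are all made up for illustration, and a mismatch is only a hint, since mailing lists produce mismatches legitimately and a sender can choose both values. Mail systems today lean on standards such as SPF, DKIM and DMARC rather than a check like this.

```python
from email import message_from_string
from email.utils import parseaddr

# A made-up message: the visible sender and the envelope sender disagree.
RAW_MESSAGE = """\
Return-Path: <bounce@sender.example>
From: "A Friend" <friend@contacts.example>
To: victim@mail.example
Subject: Here is that file

(body omitted)
"""


def domain_of(header_value) -> str:
    """Extract the lowercase domain from an address header, or '' if the header is missing."""
    _, address = parseaddr(header_value or "")
    return address.rpartition("@")[2].lower()


def from_matches_return_path(raw: str) -> bool:
    """Return True only if both headers exist and share the same domain."""
    msg = message_from_string(raw)
    visible = domain_of(msg["From"])
    envelope = domain_of(msg["Return-Path"])
    return bool(visible) and visible == envelope


print(from_matches_return_path(RAW_MESSAGE))
```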

The Code Red and Code Red II worms popped up in the summer of 2001. Both worms exploited a vulnerability in Microsoft's IIS Web server software, which ran on machines using Windows 2000 and Windows NT. The vulnerability was a buffer overflow: when the software receives more information than its buffer can hold, the excess overwrites adjacent memory.
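A buffer overflow is easier to picture with a toy model. The sketch below is not the Code Red exploit or any real server code; it simulates a block of memory as a Python bytearray in which an 8-byte input buffer sits directly in front of a 4-byte field the program relies on. The layout and all names are assumptions chosen for illustration.

```python
BUFFER_SIZE = 8  # bytes reserved for incoming data in this toy layout


def make_memory() -> bytearray:
    """Return an 8-byte input buffer followed directly by a 4-byte control field."""
    return bytearray(b"\x00" * BUFFER_SIZE + b"SAFE")


def unchecked_copy(memory: bytearray, data: bytes) -> None:
    """Copy input with no length check, so long input spills into the next field."""
    memory[0:len(data)] = data


def checked_copy(memory: bytearray, data: bytes) -> None:
    """Reject input that does not fit, which is the kind of check a patch adds."""
    if len(data) > BUFFER_SIZE:
        raise ValueError("input larger than buffer")
    memory[0:len(data)] = data


memory = make_memory()
unchecked_copy(memory, b"A" * BUFFER_SIZE + b"EVIL")  # 12 bytes into an 8-byte buffer
print(bytes(memory[BUFFER_SIZE:]))  # the control field no longer reads SAFE
```

In a real program the overwritten bytes might be data the software uses to decide what to run next, which is how an overflow can hand control to an attacker.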

The original Code Red worm initiated a distributed denial of service (DDoS) attack on the White House. That means all the computers infected with Code Red tried to contact the Web servers at the White House at the same time, overloading the machines.

A Windows 2000 machine infected by the Code Red II worm no longer obeys the owner. That's because the worm creates a backdoor into the computer's operating system, allowing a remote user to access and control the machine. In computing terms, this is a system-level compromise, and it's bad news for the computer's owner. The person behind the virus can access information from the victim's computer or even use the infected computer to commit crimes. That means the victim not only has to deal with an infected computer, but also may fall under suspicion for crimes he or she didn't commit.

While Windows NT machines were vulnerable to the Code Red worms, the viruses' effect on these machines wasn't as extreme. Web servers running Windows NT might crash more often than normal, but that was about as bad as it got -- mild compared to the woes experienced by Windows 2000 users.

Microsoft released software patches that addressed the security vulnerability in Windows 2000 and Windows NT. Once patched, the original worms could no longer infect a Windows 2000 machine; however, the patch didn't remove viruses from infected computers -- victims had to do that themselves.

Antivirus Software

It's important to have an antivirus program on your computer, and to keep it up to date. But you shouldn't use more than one suite, as multiple antivirus programs can interfere with one another. Here's a list of some antivirus software suites:

- Avast Antivirus

- AVG Anti-Virus

- Kaspersky Anti-Virus

- McAfee VirusScan

- Norton AntiVirus

Another virus to hit the Internet in 2001 was the Nimda (which is admin spelled backwards) worm. Nimda spread through the Internet rapidly, becoming the fastest propagating computer virus at that time. In fact, according to TruSecure CTO Peter Tippett, it only took 22 minutes from the moment Nimda hit the Internet to reach the top of the list of reported attacks [source: Anthes].

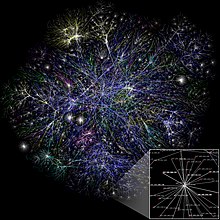

The Nimda worm's primary targets were Internet servers. While it could infect a home PC, its real purpose was to bring Internet traffic to a crawl. It could travel through the Internet using multiple methods, including e-mail. This helped spread the virus across multiple servers in record time.

The Nimda worm created a backdoor into the victim's operating system. It allowed the person behind the attack to access the same level of functions as whatever account was logged into the machine currently. In other words, if a user with limited privileges activated the worm on a computer, the attacker would also have limited access to the computer's functions. On the other hand, if the victim was the administrator for the machine, the attacker would have full control.

The spread of the Nimda virus caused some network systems to crash as more of the system's resources became fodder for the worm. In effect, the Nimda worm became a distributed denial of service (DDoS) attack.

In late January 2003, a new Web server virus spread across the Internet. Many computer networks were unprepared for the attack, and as a result the virus brought down several important systems. The Bank of America's ATM service crashed, the city of Seattle suffered outages in 911 service and Continental Airlines had to cancel several flights due to electronic ticketing and check-in errors.

The culprit was the SQL Slammer virus, also known as Sapphire. By some estimates, the virus caused more than $1 billion in damages before patches and antivirus software caught up to the problem [source: Lemos]. The progress of Slammer's attack is well documented. Only a few minutes after infecting its first Internet server, the Slammer virus was doubling its number of victims every few seconds. Fifteen minutes after its first attack, the Slammer virus infected nearly half of the servers that act as the pillars of the Internet [source: Boutin].
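Doubling every few seconds adds up faster than intuition suggests. The sketch below works through the arithmetic of unconstrained doubling. The 8.5-second doubling time and the 75,000-machine target are illustrative assumptions, not figures from this article, and the function names are hypothetical. Real outbreaks level off once vulnerable machines and network bandwidth run out.

```python
import math


def infected_after(seconds: float, doubling_time: float, initial: int = 1) -> float:
    """Number of infected machines after `seconds` of unconstrained doubling."""
    return initial * 2 ** (seconds / doubling_time)


def seconds_to_reach(target: int, doubling_time: float, initial: int = 1) -> float:
    """Time needed to grow from `initial` to `target` infected machines."""
    return doubling_time * math.log2(target / initial)


DOUBLING_TIME = 8.5  # seconds; an assumed value for illustration

print(round(infected_after(60, DOUBLING_TIME)))          # machines after one minute
print(round(seconds_to_reach(75_000, DOUBLING_TIME)))    # seconds to reach 75,000
```

Under these assumptions a single infected server becomes tens of thousands in a little over two minutes, which is why defenders had no realistic chance to react by hand.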

The Slammer virus taught a valuable lesson: It's not enough to make sure you have the latest patches and antivirus software. Hackers will always look for a way to exploit any weakness, particularly if the vulnerability isn't widely known. While it's still important to try and head off viruses before they hit you, it's also important to have a worst-case-scenario plan to fall back on should disaster strike.

Maybe you've seen the ad in Apple's Mac computer marketing campaign where Justin "I'm a Mac" Long consoles John "I'm a PC" Hodgman. Hodgman comes down with a virus and points out that there are more than 100,000 viruses that can strike a computer. Long says that those viruses target PCs, not Mac computers.

For the most part, that's true. Mac computers are partially protected from virus attacks because of a concept called security through obscurity. Apple has a reputation for keeping its operating system (OS) and hardware a closed system -- Apple produces both the hardware and the software. This keeps the OS obscure. Traditionally, Macs have been a distant second to PCs in the home computer market. A hacker who creates a virus for the Mac won't hit as many victims as he or she would with a virus for PCs.

But that hasn't stopped at least one Mac hacker. In 2006, the Leap-A virus, also known as Oompa-A, debuted. It uses the iChat instant messaging program to propagate across vulnerable Mac computers. After the virus infects a Mac, it searches through the iChat contacts and sends a message to each person on the list. The message contains a corrupted file that appears to be an innocent JPEG image.

The Leap-A virus doesn't cause much harm to computers, but it does show that even a Mac computer can fall prey to malicious software. As Mac computers become more popular, we'll probably see more hackers create customized viruses that could damage files on the computer or snarl network traffic. Hodgman's character may yet have his revenge.

We're down to the end of the list. What computer virus has landed the number one spot?

The latest virus on our list is the dreaded Storm Worm. It was late 2006 when computer security experts first identified the worm. The public began to call the virus the Storm Worm because one of the e-mail messages carrying the virus had as its subject "230 dead as storm batters Europe." Antivirus companies call the worm other names. For example, Symantec calls it Peacomm while McAfee refers to it as Nuwar. This might sound confusing, but there's already a 2001 virus called the W32.Storm.Worm. The 2001 virus and the 2006 worm are completely different programs.

The Storm Worm is a Trojan horse program. Its payload is another program, though not always the same one. Some versions of the Storm Worm turn computers into zombies or bots. As computers become infected, they become vulnerable to remote control by the person behind the attack. Some hackers use the Storm Worm to create a botnet and use it to send spam mail across the Internet.

Many versions of the Storm Worm fool the victim into downloading the application through fake links to news stories or videos. The people behind the attacks will often change the subject of the e-mail to reflect current events. For example, just before the 2008 Olympics in Beijing, a new version of the worm appeared in e-mails with subjects like "a new deadly catastrophe in China" or "China's most deadly earthquake." The e-mail claimed to link to video and news stories related to the subject, but in reality clicking on the link activated a download of the worm to the victim's computer [source: McAfee].

Several news agencies and blogs named the Storm Worm one of the worst virus attacks in years. By July 2007, an official with the security company Postini claimed that the firm detected more than 200 million e-mails carrying links to the Storm Worm during an attack that spanned several days [source: Gaudin]. Fortunately, not every e-mail led to someone downloading the worm.

Although the Storm Worm is widespread, it's not the most difficult virus to detect or remove from a computer system. If you keep your antivirus software up to date and remember to use caution when you receive e-mails from unfamiliar people or see strange links, you'll save yourself some major headaches.

Phoning it In

Not all computer viruses focus on computers. Some target other electronic devices. Here's just a small sample of some highly portable viruses:

- CommWarrior attacked smartphones running the Symbian operating system (OS).

- The Skulls Virus also attacked Symbian phones and displayed screens of skulls instead of a home page on the victims' phones.

- RavMonE.exe is a virus that could infect iPod MP3 devices made between Sept. 12, 2006, and Oct. 18, 2006.

- Fox News reported in March 2008 that some electronic gadgets leave the factory with viruses pre-installed -- these viruses attack your computer when you sync the device with your machine [source: Fox News].

A Matter of Timing

Some hackers program viruses to sit dormant on a victim's computer only to unleash an attack on a specific date. Here's a quick sample of some famous viruses that had time triggers:

- The Jerusalem virus activated every Friday the 13th to destroy data on the victim computer's hard drive

- The Michelangelo virus activated on March 6, 1992 -- Michelangelo was born on March 6, 1475

- The Chernobyl virus activated on April 26, 1999 -- the 13th anniversary of the Chernobyl meltdown disaster

- The Nyxem virus delivered its payload on the third of every month, wiping out files on the victim's computer
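Each trigger in the list above boils down to a simple test against the calendar. The sketch below shows what those tests look like using Python's standard `datetime` module. The function names are hypothetical and nothing here is taken from the viruses' actual code; a scanner or an administrator could use the same kind of check to know which dates deserve extra caution.

```python
from datetime import date


def is_friday_13th(day: date) -> bool:
    """Jerusalem-style trigger: the 13th of a month that falls on a Friday."""
    return day.day == 13 and day.weekday() == 4  # weekday(): Monday is 0, Friday is 4


def is_anniversary(day: date, month: int, day_of_month: int) -> bool:
    """Michelangelo- or Chernobyl-style trigger: a fixed month and day."""
    return (day.month, day.day) == (month, day_of_month)


def is_third_of_month(day: date) -> bool:
    """Nyxem-style trigger: the third day of any month."""
    return day.day == 3


print(is_friday_13th(date(2004, 2, 13)))
print(is_anniversary(date(1992, 3, 6), 3, 6))
print(is_third_of_month(date(2006, 2, 3)))
```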

The MyDoom (or Novarg) virus is another worm that can create a backdoor in the victim computer's operating system. The original MyDoom virus -- there have been several variants -- had two triggers. One trigger caused the virus to begin a denial of service (DoS) attack starting Feb. 1, 2004. The second trigger commanded the virus to stop distributing itself on Feb. 12, 2004. Even after the virus stopped spreading, the backdoors created during the initial infections remained active [source: Symantec].

Later that year, a second outbreak of the MyDoom virus gave several search engine companies grief. Like other viruses, MyDoom searched victim computers for e-mail addresses as part of its replication process. But it would also send a search request to a search engine and use e-mail addresses found in the search results. Eventually, search engines like Google began to receive millions of search requests from corrupted computers. These attacks slowed down search engine services and even caused some to crash [source: Sullivan].

MyDoom spread through e-mail and peer-to-peer networks. According to the security firm MessageLabs, one in every 12 e-mail messages carried the virus at one time [source: BBC]. Like the Klez virus, MyDoom could spoof e-mails so that it became very difficult to track the source of the infection.

Next, we'll take a look at a pair of viruses created by the same hacker: the Sasser and Netsky viruses.

Sometimes computer virus programmers escape detection. But once in a while, authorities find a way to track a virus back to its origin. Such was the case with the Sasser and Netsky viruses. A 17-year-old German named Sven Jaschan created the two programs and unleashed them onto the Internet. While the two worms behaved in different ways, similarities in the code led security experts to believe they both were the work of the same person.

The Sasser worm attacked computers through a Microsoft Windows vulnerability. Unlike other worms, it didn't spread through e-mail. Instead, once the virus infected a computer, it looked for other vulnerable systems. It contacted those systems and instructed them to download the virus. The virus would scan random IP addresses to find potential victims. The virus also altered the victim's operating system in a way that made it difficult to shut down the computer without cutting off power to the system.

The Netsky virus moves through e-mails and Windows networks. It spoofs e-mail addresses and propagates through a 22,016-byte file attachment [source: CERT]. As it spreads, it can cause a denial of service (DoS) attack as systems collapse while trying to handle all the Internet traffic. At one time, security experts at Sophos believed Netsky and its variants accounted for 25 percent of all computer viruses on the Internet [source: Wagner].

Sven Jaschan spent no time in jail; he received a sentence of one year and nine months of probation. Because he was under 18 at the time of his arrest, he avoided being tried as an adult in German courts.

So far, most of the viruses we've looked at target PCs running Windows. But Macintosh computers aren't immune to computer virus attacks. In the next section, we'll take a look at the first virus to commit a Mac attack.

Oddball Viruses

Not all viruses cause severe damage to computers or destroy networks. Some just cause computers to act in odd ways. An early virus called Ping-Pong created a bouncing ball graphic, but didn't seriously damage the infected computer. There are several joke programs that might make a computer owner think his or her computer is infected, but they're really harmless applications that don't self-replicate. When in doubt, it's best to let an antivirus program remove the application.

Sometimes computer virus programmers escape detection. But once in a while, authorities find a way to track a virus back to its origin. Such was the case with the Sasser and Netsky viruses. A 17-year-old German named Sven Jaschan created the two programs and unleashed them onto the Internet. While the two worms behaved in different ways, similarities in the code led security experts to believe they both were the work of the same person.

The Sasser worm attacked computers through a Microsoft Windows vulnerability. Unlike other worms, it didn't spread through e-mail. Instead, once it infected a computer, it scanned random IP addresses to find other vulnerable systems, then contacted those systems and instructed them to download the worm. It also altered the victim's operating system in a way that made it difficult to shut down the computer without cutting off power to the system.
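Scanning random IP addresses tends to leave a recognizable footprint: one machine suddenly tries to reach a large number of different addresses. As a rough illustration of how a network administrator might spot that pattern, here is a minimal Python sketch. The log format (pairs of source and destination addresses), the function name `find_scanners` and the threshold of 100 are all assumptions made for this example; they don't come from any particular security product.

```python
from collections import defaultdict

def find_scanners(connections, threshold=100):
    """Return source addresses that contacted at least `threshold` distinct destinations.

    `connections` is an iterable of (source, destination) address pairs,
    a hypothetical simplified log format used only for this illustration.
    """
    targets = defaultdict(set)
    for source, destination in connections:
        targets[source].add(destination)
    # A host reaching out to many different addresses may be scanning.
    return sorted(src for src, dsts in targets.items() if len(dsts) >= threshold)


# Example: one host touches 150 different addresses, another only two.
log = [("10.0.0.5", f"192.0.2.{i}") for i in range(150)]
log += [("10.0.0.9", "192.0.2.1"), ("10.0.0.9", "192.0.2.2")]
suspects = find_scanners(log)
```

A real monitoring tool would also look at time windows, ports and failed connections. Counting distinct destinations is only the simplest version of the idea.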

The Netsky virus moves through e-mails and Windows networks. It spoofs e-mail addresses and propagates through a 22,016-byte file attachment [source: CERT]. As it spreads, it can cause a denial of service (DoS) attack as systems collapse while trying to handle all the Internet traffic. At one time, security experts at Sophos believed Netsky and its variants accounted for 25 percent of all computer viruses on the Internet [source: Wagner].

Sven Jaschan spent no time in jail; he received a sentence of one year and nine months of probation. Because he was under 18 at the time of his arrest, he avoided being tried as an adult in German courts.

So far, most of the viruses we've looked at target PCs running Windows. But Macintosh computers aren't immune to computer virus attacks. In the next section, we'll take a look at the first virus to commit a Mac attack.

For the most part, that's true. Mac computers are partially protected from virus attacks because of a concept called security through obscurity. Apple has a reputation for keeping its operating system (OS) and hardware a closed system -- Apple produces both the hardware and the software. This keeps the OS obscure. Traditionally, Macs have been a distant second to PCs in the home computer market. A hacker who creates a virus for the Mac won't hit as many victims as he or she would with a virus for PCs.

But that hasn't stopped at least one Mac hacker. In 2006, the Leap-A virus, also known as Oompa-A, debuted. It uses the iChat instant messaging program to propagate across vulnerable Mac computers. After the virus infects a Mac, it searches through the iChat contacts and sends a message to each person on the list. The message contains a corrupted file that appears to be an innocent JPEG image.
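A file that only pretends to be a picture can often be caught by looking at its first few bytes rather than its name. Genuine JPEG files begin with the bytes FF D8 FF. The Python sketch below shows that general check; it isn't a description of how any particular antivirus program detects Leap-A, and the function names are made up for this example.

```python
JPEG_MAGIC = b"\xff\xd8\xff"  # the bytes every genuine JPEG file starts with

def looks_like_jpeg(data: bytes) -> bool:
    """Return True if the data begins with the JPEG start-of-image marker."""
    return data.startswith(JPEG_MAGIC)

def is_suspicious_image(filename: str, data: bytes) -> bool:
    """Flag a file whose name claims to be a JPEG but whose contents say otherwise."""
    claims_jpeg = filename.lower().endswith((".jpg", ".jpeg"))
    return claims_jpeg and not looks_like_jpeg(data)


# A file named like a photo that actually starts with gzip archive bytes.
flagged = is_suspicious_image("photo.jpg", b"\x1f\x8b\x08\x00")
```

This catches only the crudest disguises. A file can have a valid image header and still carry something harmful, so a check like this works alongside antivirus software rather than replacing it.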

The Leap-A virus doesn't cause much harm to computers, but it does show that even a Mac computer can fall prey to malicious software. As Mac computers become more popular, we'll probably see more hackers create customized viruses that could damage files on the computer or snarl network traffic. Hodgman's character may yet have his revenge.

We're down to the end of the list. What computer virus has landed the number one spot?

Breaking into Song

While computer viruses can pose a serious threat to computer systems and Internet traffic, sometimes the media overstates the impact of a particular virus. For example, the Michelangelo virus gained a great deal of media attention, but the actual damage caused by the virus was pretty small. That might have been the inspiration for the song "Virus Alert" by "Weird Al" Yankovic. The song warns listeners of a computer virus called Stinky Cheese that not only wipes out your computer's hard drive, but also forces you to listen to Jethro Tull songs and legally change your name to Reggie.

The Storm Worm is a Trojan horse program. Its payload is another program, though not always the same one. Some versions of the Storm Worm turn computers into zombies or bots. As computers become infected, they become vulnerable to remote control by the person behind the attack. Some hackers use the Storm Worm to create a botnet and use it to send spam mail across the Internet.

Many versions of the Storm Worm fool the victim into downloading the application through fake links to news stories or videos. The people behind the attacks will often change the subject of the e-mail to reflect current events. For example, just before the 2008 Olympics in Beijing, a new version of the worm appeared in e-mails with subjects like "a new deadly catastrophe in China" or "China's most deadly earthquake." The e-mail claimed to link to video and news stories related to the subject, but in reality clicking on the link activated a download of the worm to the victim's computer [source: McAfee].

Several news agencies and blogs named the Storm Worm one of the worst virus attacks in years. By July 2007, an official with the security company Postini claimed that the firm detected more than 200 million e-mails carrying links to the Storm Worm during an attack that spanned several days [source: Gaudin]. Fortunately, not every e-mail led to someone downloading the worm.

Although the Storm Worm is widespread, it's not the most difficult virus to detect or remove from a computer system. If you keep your antivirus software up to date and remember to use caution when you receive e-mails from unfamiliar people or see strange links, you'll save yourself some major headaches.